
Kubernetes Networking Model

Kubernetes is a system for managing containers, which are lightweight packages of software. In Kubernetes, containers run in groups called "Pods". Each Pod gets its own IP address that is unique across the cluster, so Pods can communicate with each other without any extra work.

This means that you don't need to explicitly connect Pods together, and you almost never need to map container ports to host ports. The result is an easy-to-understand model where Pods can be treated like virtual machines or physical hosts when it comes to things like port allocation, service discovery, load balancing, and migration.

When it comes to networking in Kubernetes, there are a few requirements that must be met. First, Pods must be able to communicate with all other Pods on any node without needing to use NAT. Second, agents on a node (like system daemons) must be able to communicate with all Pods on that node.

Kubernetes IP addresses exist at the Pod scope: containers within a Pod share a network namespace, including its IP address and MAC address. Containers within a Pod can all reach each other's ports on localhost, but they must coordinate their port usage just like processes in a virtual machine.

The way that this networking is implemented depends on the particular container runtime being used. In some cases, it's possible to request ports on the Node itself which forward traffic to your Pod (called host ports), but this is not a common operation.
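To make the host port idea concrete, here is a minimal sketch that builds a Pod manifest as a Python dictionary and prints it as JSON. The Pod name `web`, the image `nginx`, and the port numbers are hypothetical values chosen for illustration; only the `containerPort` and `hostPort` fields come from the Pod API.

```python
import json


def pod_with_host_port(name: str, image: str, container_port: int, host_port: int) -> dict:
    """Build a Pod manifest whose container port is also requested on the node."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # hostPort asks for a port on the node that forwards to the Pod.
                    "ports": [{"containerPort": container_port, "hostPort": host_port}],
                }
            ]
        },
    }


# Hypothetical example values: node port 8080 forwards to container port 80.
manifest = pod_with_host_port("web", "nginx", 80, 8080)
print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied to a test cluster.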

Kubernetes networking is focused on four main concerns:

Containers within a Pod use loopback networking to communicate with each other.

Cluster networking provides communication between different Pods.

The Service API lets you expose an application running in Pods so that it is reachable from outside your cluster.

Ingress provides additional functionality specifically for exposing HTTP applications, websites, and APIs.

You can also use Services to publish services only for consumption inside your cluster. The Connecting Applications with Services tutorial provides a great hands-on example to learn more about Services and Kubernetes networking.

The Cluster Networking page in the Kubernetes documentation explains how to set up networking for a cluster and gives an overview of the technologies involved.

How Does K8s Networking Actually Work?

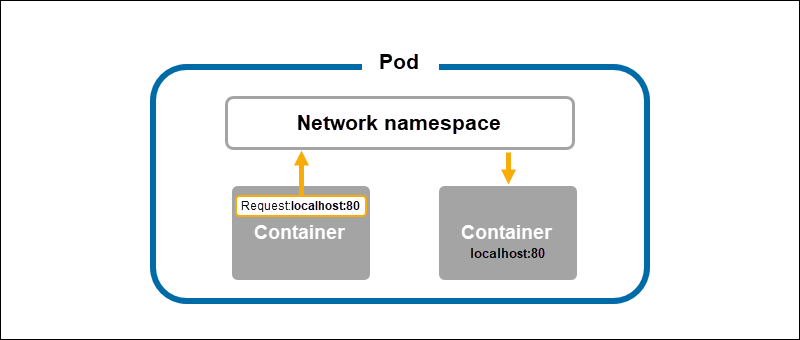

Container-to-Container Communication

When two (or more) containers run in the same pod, they share a network namespace.

A hidden container called the Pause container holds the network namespace for a pod. The Pause container sets up the namespace, creating shared resources for all containers in a pod.

Because of the shared network namespace, containers in the same pod can communicate through localhost and port numbers. Each container in the same pod must listen on a different port to avoid port conflicts.
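The effect of a shared network namespace can be imitated on any machine, because two processes on one host share loopback in the same way. The sketch below is only an analogy: the two "containers" are plain sockets in one Python process, and the helper name `bind_listener` is made up for this example.

```python
import socket
from typing import Optional


def bind_listener(port: int) -> Optional[socket.socket]:
    """Try to listen on 127.0.0.1:port; return None if the port is already taken."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind(("127.0.0.1", port))
        sock.listen()
        return sock
    except OSError:
        sock.close()
        return None


# "Container A" listens on a free port (port 0 lets the OS pick one).
container_a = bind_listener(0)
port = container_a.getsockname()[1]

# "Container B" tries the same port in the same namespace and is refused.
container_b = bind_listener(port)
print("second bind succeeded:", container_b is not None)

# "Container B" can still reach container A through localhost.
client = socket.create_connection(("127.0.0.1", port))
server_side, _ = container_a.accept()
client.sendall(b"ping")
received = server_side.recv(4)
print("container A received:", received)

for s in (client, server_side, container_a):
    s.close()
```

The second bind fails for the same reason two containers in one pod cannot both listen on the same port.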

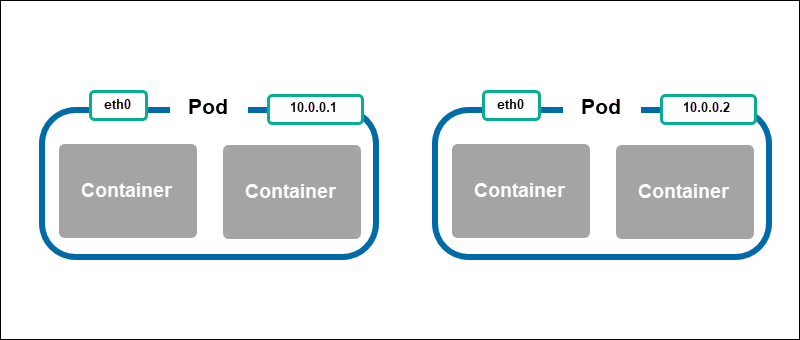

Pod-to-Pod Communication

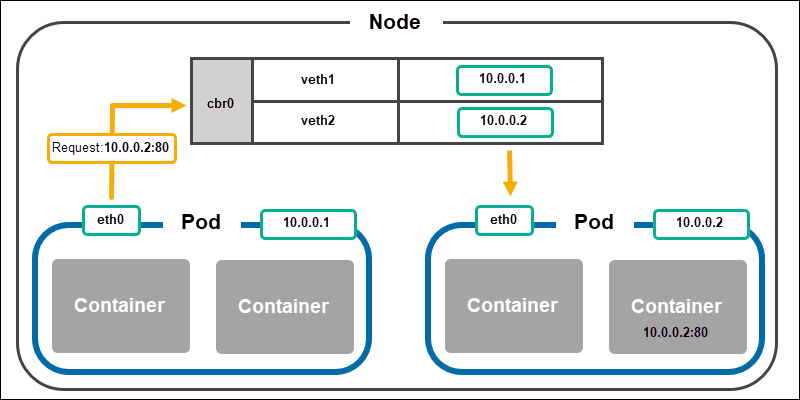

Every pod has an IP address and network namespace. Each pod has a virtual ethernet connection, which looks like a standard eth0 connection to the pod. The eth0 devices connect to the virtual ethernet device on the node.

The virtual ethernet device acts as a tunnel for the pod's network inside the node. The connection on the node's side is vethx, where x represents a pod's number (veth1, veth2, and so on).

When a pod sends a request to another pod, the request leaves through the pod's eth0 interface, which tunnels to the matching vethx interface on the node.

Next, the request needs a way to connect to the receiving pod. The element that connects the two pod networks is the network bridge (cbr0), which resides on the node. The bridge connects all pods on a node.

When a request gets to the bridge, the bridge asks the connected pods which one owns the destination IP address. The pod with the matching address answers, the bridge stores that mapping, and the request is forwarded to that pod. The response travels back through the bridge to the original pod.

Once the request reaches the correct pod, it is handled at the pod level.
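The lookup described above can be sketched as a toy model. This is a deliberate simplification: a real Linux bridge learns MAC addresses and relies on ARP between the pods, while the hypothetical `Bridge` class here maps pod IPs straight to veth names. All interface names and addresses are example values.

```python
from typing import Dict, Optional


class Bridge:
    """Toy model of a node-local bridge such as cbr0."""

    def __init__(self) -> None:
        self.ports: Dict[str, str] = {}    # veth name -> pod IP attached to it
        self.learned: Dict[str, str] = {}  # pod IP -> veth name, filled on first lookup

    def attach(self, veth: str, pod_ip: str) -> None:
        self.ports[veth] = pod_ip

    def forward(self, dst_ip: str) -> Optional[str]:
        """Return the veth that leads to dst_ip, or None if no local pod owns it."""
        if dst_ip in self.learned:
            return self.learned[dst_ip]
        # Ask every attached pod "is this address yours?"
        for veth, pod_ip in self.ports.items():
            if pod_ip == dst_ip:
                self.learned[dst_ip] = veth  # remember the answer for next time
                return veth
        return None


cbr0 = Bridge()
cbr0.attach("veth1", "10.244.1.2")
cbr0.attach("veth2", "10.244.1.3")

print(cbr0.forward("10.244.1.3"))  # local pod
print(cbr0.forward("10.244.2.7"))  # pod on another node
```

A result of `None` corresponds to the case where the bridge cannot resolve the request and the traffic has to leave the node.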

Node-to-Node Communication

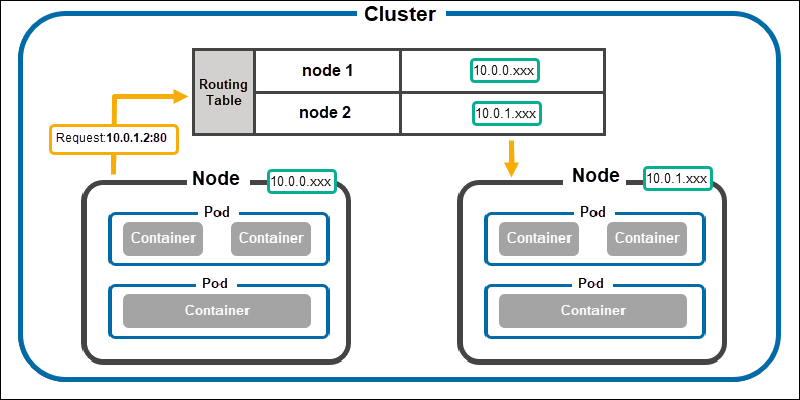

The bridge (cbr0) cannot resolve the request when the two pods are on different nodes. The communication goes up to the cluster level to establish node-to-node communication.

The details vary by cloud provider and networking plugin. In most cases, a cluster stores a routing table that maps IP address ranges to nodes.

When a bridge cannot resolve a request, the destination IP address is checked against the ranges in the routing table. The matching range identifies the appropriate node, and the resolution continues at the node level.
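A routing table of this kind can be sketched with Python's standard `ipaddress` module. The pod CIDR ranges and node names below are assumptions for illustration; a real cluster gets them from its network plugin or cloud provider.

```python
import ipaddress
from typing import Optional

# Hypothetical routing table: pod IP range -> node that hosts that range.
ROUTES = {
    ipaddress.ip_network("10.244.1.0/24"): "node-1",
    ipaddress.ip_network("10.244.2.0/24"): "node-2",
}


def node_for_pod(pod_ip: str) -> Optional[str]:
    """Return the node whose pod range contains pod_ip, or None if no range matches."""
    addr = ipaddress.ip_address(pod_ip)
    for cidr, node in ROUTES.items():
        if addr in cidr:
            return node
    return None


print(node_for_pod("10.244.2.7"))
print(node_for_pod("192.168.0.1"))
```

Once the node is known, delivery continues on that node through its own bridge, as in the pod-to-pod case.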

Pod-to-Service Communication

A Kubernetes service maps a single IP address to a group of pods, which solves the problem of pod addresses changing over time. The service provides a single endpoint with a stable IP address and hostname, and forwards requests to one of the pods behind that service.

The kube-proxy process runs in every node, mapping a service's virtual IP addresses to pod IP addresses. Services enable implementing load balancing techniques and exposing a deployment for external connections.
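The mapping from a service's virtual IP to pod IPs can be pictured with the sketch below. It is not how kube-proxy is implemented: the real component programs packet-forwarding rules on the node rather than choosing backends in application code. The class name `ServiceTable` and all addresses are hypothetical.

```python
import random
from typing import Dict, List, Optional


class ServiceTable:
    """Toy lookup table: service virtual IP -> current pod IPs."""

    def __init__(self, seed: Optional[int] = None) -> None:
        self._backends: Dict[str, List[str]] = {}
        self._rng = random.Random(seed)

    def set_endpoints(self, cluster_ip: str, pod_ips: List[str]) -> None:
        """Replace the backend list, for example after pods are rescheduled."""
        self._backends[cluster_ip] = list(pod_ips)

    def resolve(self, cluster_ip: str) -> str:
        """Pick one backend pod at random for a request to the virtual IP."""
        backends = self._backends.get(cluster_ip)
        if not backends:
            raise LookupError(f"no backends for {cluster_ip}")
        return self._rng.choice(backends)


table = ServiceTable(seed=0)
table.set_endpoints("10.96.0.10", ["10.244.1.3", "10.244.2.7"])
chosen = table.resolve("10.96.0.10")
print(chosen)
```

Clients keep using the same virtual IP while `set_endpoints` swaps the pod list underneath, which is the point of putting a service in front of pods.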

External-to-Service Communication

Kubernetes clusters have a specialized service for DNS resolution. A service automatically receives a domain name in the form <service>.<namespace>.svc.cluster.local. Exposing the service makes it reachable from the outside world.

The DNS service resolves the domain name to the service's IP address. The kube-proxy process then maps the service's IP address to a pod's IP address. Finding a service's IP address by name in this way is known as service discovery.
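The naming scheme is easy to reproduce in code. In the sketch below, `service_fqdn` and `resolve_service` are hypothetical helper names, and `cluster.local` is assumed as the cluster domain, matching the form shown above. The lookup function only returns useful results when it runs inside a pod that uses the cluster DNS, so the example calls only the name builder.

```python
import socket
from typing import List


def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build the DNS name a service receives inside the cluster."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve_service(service: str, namespace: str = "default") -> List[str]:
    """Look up the service's IP addresses; intended to run inside a pod."""
    infos = socket.getaddrinfo(service_fqdn(service, namespace), None,
                               proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


print(service_fqdn("web", "prod"))
```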

Services

Services let you expose an application running in your cluster behind a single outward-facing endpoint, even when the workload is split across multiple backends.
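A service finds its backends through a label selector. The sketch below shows that matching step on plain dictionaries. The service manifest uses real Service API fields, but the service name, labels, pod list, and helper names (`matches`, `backends_for`) are made up for illustration.

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """A pod matches when it carries every label in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def backends_for(service: dict, pods: List[dict]) -> List[str]:
    """Return the IPs of all pods selected by the service."""
    selector = service["spec"]["selector"]
    return [pod["ip"] for pod in pods if matches(selector, pod["labels"])]


# Hypothetical service: one endpoint on port 80 in front of all "app: web" pods.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": {"app": "web"},
        "ports": [{"protocol": "TCP", "port": 80, "targetPort": 8080}],
    },
}

pods = [
    {"ip": "10.244.1.3", "labels": {"app": "web", "tier": "frontend"}},
    {"ip": "10.244.2.7", "labels": {"app": "web"}},
    {"ip": "10.244.2.8", "labels": {"app": "db"}},
]

backends = backends_for(service, pods)
print(backends)
```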

Ingress

Ingress makes your HTTP (or HTTPS) network service available using a protocol-aware configuration mechanism that understands web concepts like URIs, hostnames, paths, and more. The Ingress concept lets you map traffic to different backends based on rules you define via the Kubernetes API.
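Rule-based routing of this kind can be sketched in a few lines. The example below assumes prefix-style path matching on whole path segments, with the longest matching prefix winning. The hostnames, paths, and backend names are hypothetical, and a real ingress controller supports more match types than this.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rule:
    host: str
    path_prefix: str
    backend: str  # "service-name:port", a made-up notation for this sketch


def path_matches(prefix: str, path: str) -> bool:
    """Match on whole path segments: /api matches /api/v1 but not /apix."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def route(rules: List[Rule], host: str, path: str) -> Optional[str]:
    """Return the backend of the longest matching rule, or None."""
    candidates = [r for r in rules
                  if r.host == host and path_matches(r.path_prefix, path)]
    if not candidates:
        return None
    return max(candidates, key=lambda r: len(r.path_prefix)).backend


rules = [
    Rule("shop.example.com", "/", "web-svc:80"),
    Rule("shop.example.com", "/api", "api-svc:8080"),
]

print(route(rules, "shop.example.com", "/api/v1/items"))
print(route(rules, "shop.example.com", "/apix"))
print(route(rules, "other.example.com", "/"))
```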

Ingress-Controller

For an Ingress to work in your cluster, there must be an ingress controller running. You need to select at least one ingress controller and make sure it is set up in your cluster. The Kubernetes documentation lists common ingress controllers that you can deploy.

EndpointSlices

The EndpointSlice API is the mechanism that Kubernetes uses to let your Service scale to handle large numbers of backends and allows the cluster to update its list of healthy backends efficiently.
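The core idea is splitting one long backend list into bounded chunks, so that a change to one backend only touches one small object. The sketch below illustrates that with a limit of 100 endpoints per slice, which is the Kubernetes default. The function name and addresses are hypothetical, and the real controller's packing logic is more involved than simple chunking.

```python
from typing import List

MAX_ENDPOINTS_PER_SLICE = 100  # Kubernetes default; configurable on the control plane


def slice_endpoints(pod_ips: List[str],
                    max_per_slice: int = MAX_ENDPOINTS_PER_SLICE) -> List[List[str]]:
    """Split a backend list into chunks of at most max_per_slice entries."""
    if max_per_slice < 1:
        raise ValueError("max_per_slice must be at least 1")
    return [pod_ips[i:i + max_per_slice]
            for i in range(0, len(pod_ips), max_per_slice)]


# 250 made-up pod addresses: 10.244.0.1 through 10.244.0.250.
endpoints = [f"10.244.0.{i + 1}" for i in range(250)]
slices = slice_endpoints(endpoints)
print([len(s) for s in slices])
```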

Network Policies

If you want to control traffic flow at the IP address or port level, NetworkPolicies allow you to specify rules for traffic flow within your cluster, as well as between Pods and the outside world. Your cluster must use a network plugin that supports NetworkPolicy enforcement.
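As an illustration, the sketch below builds a NetworkPolicy manifest that allows only pods labeled `role: frontend` to reach pods labeled `role: db` on TCP port 5432. The field names follow the `networking.k8s.io/v1` API, while the policy name, labels, and port are assumptions for this example.

```python
import json

policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "allow-frontend-to-db", "namespace": "default"},
    "spec": {
        # The pods this policy protects.
        "podSelector": {"matchLabels": {"role": "db"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                # Only traffic from these pods is allowed in...
                "from": [{"podSelector": {"matchLabels": {"role": "frontend"}}}],
                # ...and only on this port.
                "ports": [{"protocol": "TCP", "port": 5432}],
            }
        ],
    },
}

print(json.dumps(policy, indent=2))
```

The policy has no effect unless the cluster's network plugin enforces NetworkPolicy, as noted above.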

DNS

Your workload can discover Services within your cluster using DNS. Each Service gets a DNS name that resolves to its IP address.

CNI Plugins

CNI plugins are also essential to Kubernetes networking. CNI (Container Network Interface) plugins are responsible for configuring the network interfaces of containers within a Kubernetes cluster. There are many different CNI plugins available, each with their own set of features and capabilities.
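To give a feel for what a CNI plugin is told to do, the sketch below builds a small network configuration list for the reference `bridge` plugin with `host-local` address management. The network name `examplenet`, the subnet, and the version string are assumptions. Real clusters usually get this file from the installer of whichever CNI plugin they use.

```python
import json

cni_config = {
    "cniVersion": "1.0.0",
    "name": "examplenet",  # hypothetical network name
    "plugins": [
        {
            "type": "bridge",     # create veth pairs and attach them to a bridge
            "bridge": "cbr0",     # bridge name used earlier in this post
            "isGateway": True,    # give the bridge an IP to act as the pods' gateway
            "ipMasq": True,       # masquerade traffic leaving the pod network
            "ipam": {
                "type": "host-local",  # hand out pod IPs from a node-local range
                "ranges": [[{"subnet": "10.244.1.0/24"}]],
                "routes": [{"dst": "0.0.0.0/0"}],
            },
        }
    ],
}

print(json.dumps(cni_config, indent=2))
```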

Follow me for more Kubernetes blogs: Yashwant Chaudhari

LinkedIn: https://www.linkedin.com/in/devops-yashwant/